Features, and Bugs, and Tech Debt, Oh My!

Posted 5/5/2023

We've all encountered this in our tech careers. We plan out a fantastic feature. As we're building it, we come across some areas we know are not being built with our best practices in mind, and we've found some low-priority bugs through testing that we want to fix, but that deadline is approaching quickly. It's ok, though; we'll incur just a bit of tech debt and promptly resolve it right after launch. Everyone is on board, the feature looks good to go, and we release it. Then WHAM! We get hit with unexpected bugs. We tested everything, completed end-to-end testing, and have automation coverage and unit test coverage. What's happening? We now have tech debt and live production bugs immediately after our new feature release. So what do we do? How do we handle this? We have another feature we're supposed to work on in 2 weeks, but that isn't enough time to fix all the bugs and tech debt.

It's best to remember that this situation happens to everyone, but there are some ways to approach it that can help your team and your company. Let's break this down into smaller pieces.

"By failing to prepare, you are preparing to fail." - Benjamin Franklin

Planning - PDLC and SDLC

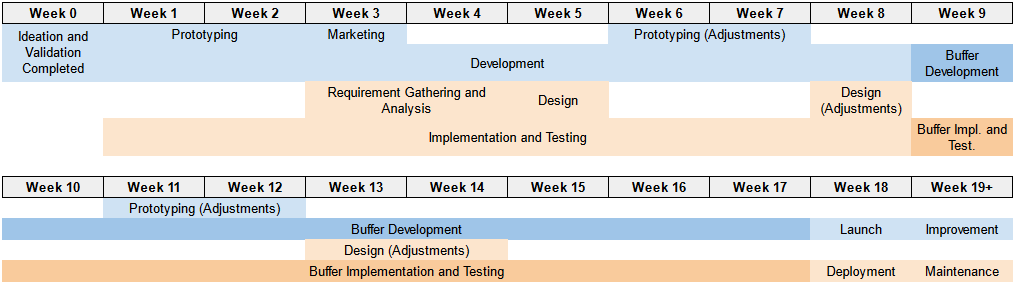

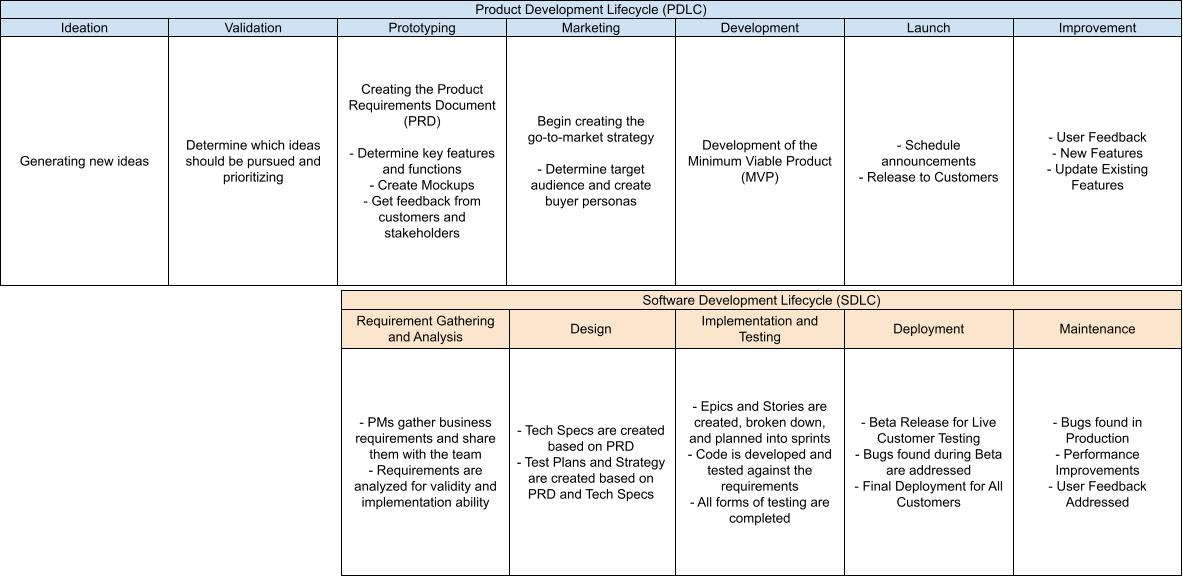

To fully understand planning, you will need to understand the Product Development Lifecycle (PDLC) and the Software Development Lifecycle (SDLC). A common mistake I've seen companies make is treating the PDLC and SDLC as separate processes. Let's start by breaking these down and talking about where they integrate and what a perfect flow would look like from a planning perspective.

As you can see from the graphic above, the PDLC starts us off with the Ideation and Validation phases. This is where new ideas are brainstormed and decided upon. These are the only two phases where the software development teams are not involved. Once we get into the Prototyping phase, the SDLC also kicks in.

Prototyping/Requirement Gathering and Analysis

- Delivery teams need product requirements to begin contributing to the feature. Once product requirements are determined, they are shared with the development team and analyzed for validity and feasibility. It is common for requirements to change during this phase as the product manager and development teams align on what can be implemented. This phase must occur so developers and testers can give input as early in the process as possible, which reduces the potential for scope creep during the Development/Implementation and Testing phases.

Marketing/Design

- Design and Marketing do not have to overlap, but in the natural progression, this is where they do overlap. Design can begin once requirements are settled, mockups are created, and the development team is comfortable with the information provided through the PRD. This design phase is where tech specs, test plans, and test strategies are created. This phase should be completed before any development occurs to help reduce the amount of scope creep and unknowns going into development. At times, gotchas will be found during the design phase that may influence or change the PRD. This is also where the MVP is usually finalized based on the complexity and time it takes to develop certain features.

Development/Implementation and Testing

- This is where the final stage of planning occurs. All designs are completed, product requirements are set, and the team is comfortable with the feature they are about to build and test. This is the stage where we break down epics and stories, go through grooming and planning, and set deadlines. Once work is planned into sprints, the code is developed, and all forms of testing are completed.

Launch/Deployment

- After all development and testing are completed, this is the stage where a Beta product and finalized product are released to customers. During Beta, bugs are addressed, and the feature is improved upon until it meets quality standards and all business requirements are met. At this time, the finalized feature is released to customers.

Improvement/Maintenance

- We are not done with a feature once it is released to production; ongoing maintenance must occur. Time should be planned for improvements, adding onto our MVP with additional features, addressing bugs found, improving performance, and addressing user feedback. At this point, the PDLC may start over depending on the size of the requested features.

"An hour of planning can save you 10 hours of doing." - Dale Carnegie

Planning Buffers and Scenarios

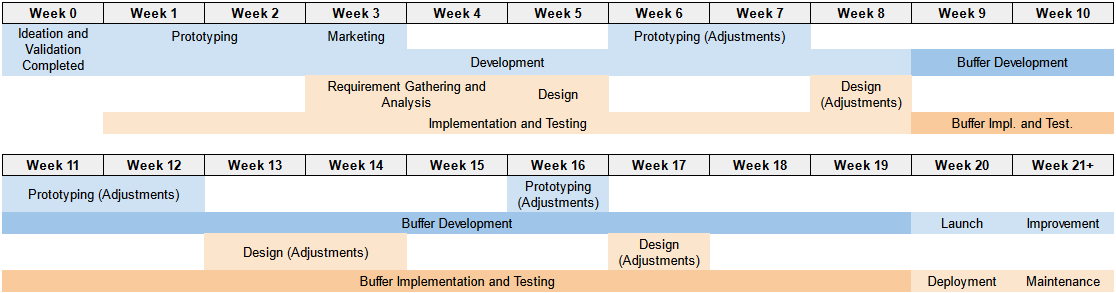

Now that you know a bit about the PDLC and SDLC, how does planning within these processes affect our tech debt and bug counts? More often than not, tech debt accumulates because the feature wasn't planned adequately throughout the PDLC and SDLC. When the Prototyping/Requirement Gathering and Analysis phase and the Design phase are rushed through or cut short for time, we end up adjusting the PRD, Tech Spec, and Test Plans while in the middle of the Implementation phase. At times, I have even seen teams start development on extensive features without a PRD, Tech Spec, or Test Plans. If we expect teams to learn as they go, we must build in buffer time to account for lessons learned. Still, generally speaking, beginning development should be avoided until the PRD, Tech Spec, and Test Plans are completed. Let's look at some scenarios you may encounter when planning new features.

All scenarios below will start with these basic expectations: 6 weeks for development, 1 week for end-to-end testing, and 1 week to address bugs found during end-to-end testing. This results in a baseline 8 development-week project.

-Design Complete-

Low-Risk

This is our ideal scenario. We've completed the PRD, Tech Spec, and Test Plans, we have minimal unknowns, and we have completed a similar project before. We will keep this estimate at an 8-development-week project since all designs are complete and the risk is low. This scenario gives us extra confidence in our estimate that we will not have in other scenarios.

High-Risk

Let's say we've completed the PRD, Tech Spec, and Test Plans; however, we still have many unknowns, and there are some new coding patterns we are working with, or this is an entirely new technology we haven't worked with. Instead of giving an eight-week estimate for this project, let's bump this up to a 10-development-week project. This allows us to address the critical tech debt we will likely find along the way and still allows testers enough time to thoroughly test the code before it's released to customers.

-Only the PRD-

Low-Risk

This is a common scenario I'm sure many of you have seen. We have the product requirements set, but we didn't take the time to build a tech spec or test plan before starting development. The project is pretty low risk, but without proper design, we will likely run into some unexpected changes that need to be made along the way. Because of this, we will add a mid-sized buffer into our time, resulting in 13 development weeks for the project. This gives the team time to solidify the design while also developing and allows them time to pivot their approach as needed while maintaining quality standards.

The total project length will be the same as a high-risk project with a complete design, but the developers and testers will be working for an additional 3 weeks on this project due to a lack of planning.

High-Risk

Several teams have found themselves in this scenario by accident. Typically it happens when the team feels they have a low-risk project and follows the above scenario, but then they get into it and realize there are many unknowns. This raises the project into the high-risk category. A project can also land in this category if the changes being made could have a widespread effect downstream when they are not handled appropriately, or for any of the reasons noted in the other high-risk scenarios. When we have a project that only has a PRD and is also high risk, we need a medium-large buffer so we have time to pivot when risks surface, address unknowns, absorb design changes during development, and maintain quality. Because of this, we will increase this project to 15 development weeks. This equates to 7 weeks of buffer for the project.

-No Planning-

Low-Risk

A critical feature request has come in, and we need to begin development immediately. We know the primary problem is being solved, but no PRD, Tech Spec, or Test Plan has been created. They're being worked on but won't be completed for at least 2 weeks while critical conversations are had. Our knowledge is currently limited, and we expect things to change as we develop this feature. The project, in general, is low risk, but we have heightened risk due to not having any planning done. Because of this, we need a large buffer of 9 weeks, landing us at 17 weeks for the development phase. This allows us to adjust course and address business requirements that are unknown today while also giving us enough time to test thoroughly throughout the process.

The total project length will be the same as a high-risk project that only has a PRD complete, but the developers and testers will be working for an additional two weeks on this project due to a lack of planning.

High-Risk

This scenario I hope you never find yourself in. When a project has no planning completed and it's high risk, a lot can go wrong. Suppose you find yourself working with new technology, complex, high-risk changes, new coding patterns, difficult-to-test features, or many unknowns. In that case, it is worth planning before starting the project to help reduce scope creep, tech debt, and pivoting during development. That being said, if taking this approach is necessary, we need the maximum amount of buffer time possible. The buffer for this project is 11 weeks which lands us at 19 weeks of development.

Planning Scenario Takeaways

While looking through the different scenarios, you may have noticed a few patterns.

- Moving from a low-risk project to a high-risk project in the same category automatically adds 2 weeks to the development time. This is because teams need the time to address the tech debt and bugs discovered within those high-risk projects before releasing.

- The less planning is completed, the more time is needed to complete the project. Moving from fully designed into only PRD adds 5 weeks, and moving from only PRD to no planning adds 4 weeks. This is because a lack of planning automatically increases the risk of the project, and there needs to be an additional buffer to account for the in-flight changes that will occur.

Breaking it down a little more plainly: a project adequately planned out will take 9 weeks less than the same project that isn't planned out. This occurs because developers will start working but need to change their approach mid-development, which leads to rework, additional testing, and additional tech debt. This may seem drastic when looking at it on paper, and that may be true for some companies and projects. Still, from my own experiences, I can honestly say I've seen 3-month projects balloon into 9-month projects because of this exact situation.
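The scenario numbers above can be reproduced with a little arithmetic. Here is a minimal Python sketch of that math, not a planning tool. The names `BASELINE_WEEKS`, `PLANNING_BUFFER`, `RISK_BUFFER`, and `estimate_weeks` are hypothetical, and the buffer sizes are simply the ones used in this article's scenarios; your own team's numbers will differ.

```python
# Illustrative only: buffer sizes are the ones used in this article's scenarios.
BASELINE_WEEKS = 6 + 1 + 1  # development + end-to-end testing + bug fixing

# Extra weeks added depending on how much planning was finished up front.
PLANNING_BUFFER = {"design_complete": 0, "prd_only": 5, "no_planning": 9}

# Extra weeks added for project risk.
RISK_BUFFER = {"low": 0, "high": 2}


def estimate_weeks(planning: str, risk: str) -> int:
    """Return the estimated development weeks for one scenario."""
    return BASELINE_WEEKS + PLANNING_BUFFER[planning] + RISK_BUFFER[risk]


if __name__ == "__main__":
    for planning in PLANNING_BUFFER:
        for risk in RISK_BUFFER:
            weeks = estimate_weeks(planning, risk)
            print(f"{planning:16} {risk:5} {weeks:3} weeks")
```

Keeping the planning buffer and the risk buffer as separate lookups makes the two patterns visible: risk always costs the same 2 weeks, while skipped planning costs far more.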

If you would like to learn more about what happens if these buffers are not in place from a quality standpoint, I will dive deeper into these scenarios in the Quality Output section.

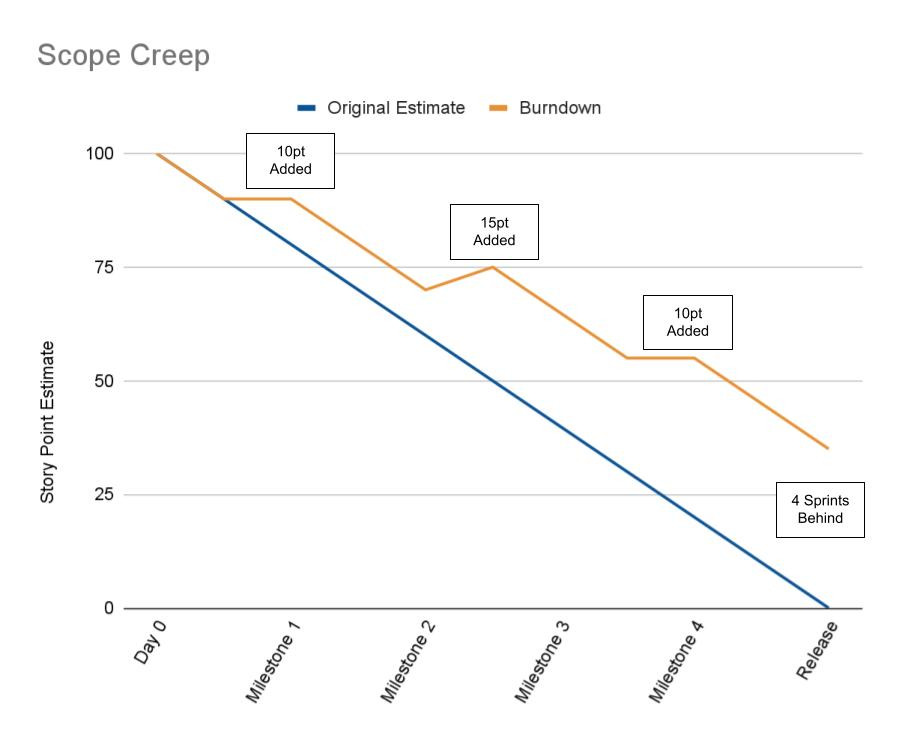

Scope Creep

One thing improper planning can also lead to is Scope Creep. While buffers can be put in place to help with this, scope creep can still derail an entire project leading to heavy overtime, decreased quality, missed deadlines, and burnout.

We've all experienced this. We started with a defined project, but three weeks in, we added five requirements we realized were missing. Two weeks later, we added another two requirements. The business still expects us to deliver on the same date even with these seven additional requirements we realized we must have for the feature to work correctly. When this happens, many different outcomes typically occur, such as:

- Dropping automation

- Skipping end-to-end testing

- Canceling the beta phase

- Releasing with known bugs we don't have time to address

- Using a lesser coding pattern that isn't as clean and isn't up to our standards

- Dropping unit testing coverage

- Overtime and weekend work to hit deadlines

These situations inevitably lead to increased bug count and tech debt, heightened stress, and decreased customer and employee retention. This has several other potential adverse side effects, but this is the general picture and the most frequent pattern I've experienced.

Avoiding scope creep through adequate planning is your best bet. Being able to promise deadlines and hit those deadlines without any trade-offs is the overall goal. However, when scope creep occurs, a trade-off always occurs. There is no way around that, but the exchange can look very different in different situations. The additional scope could be traded for one of the three options below to continue developing a quality product while ensuring teams don't burn out.

-Extended Timeline-

Pros

- Releasing a quality product improves customer retention and customer growth

- Employees maintain work-life balance and avoid burnout

- A less stressful work environment improves employee retention

- The team learns how long this type of project will take in the future so they can plan accordingly

- Fewer bugs and tech debt

Cons

- Board-promised deadlines are missed. This can lead to less funding in the future if not handled properly and communicated effectively

- Customers might be upset at the delay if they were aware of the upcoming release date

- This can lead to teams feeling it's ok to plan improperly and move deadlines

- Delays upcoming project deadlines

-Additional Allocation-

Pros

- If addressed early in the project, the deadline will not need to be shifted, overtime will not be required, and employees can maintain a work-life balance and avoid burnout

- Releasing a quality product improves customer retention and customer growth

Cons

- Slows down other vital projects, leading them to need extended timelines for completion

- Bringing in team members who are not familiar with the project slows the current team down to help with ramp up

- More significant possibility for bugs to escape due to insufficient expertise from newly added team members

- Employees who are moved may feel resentment from being forced to change teams and drop their projects

- This can lead to teams expecting additional allocation any time they are falling behind, and this is typically not sustainable

- This may still lead to overtime and burnout due to the reduction in speed needed to ramp up new team members

- The team did not learn how long this project would have taken, so proper planning will be a challenge in the future

Note

This approach is not always possible. In some situations, we will run into a "too many cooks in the kitchen" scenario where all of the work must be completed in one repository so additional developers would not increase development speed.

-Reducing MVP Scope-

Pros

- Releasing a quality product improves customer retention and customer growth

- Employees maintain work-life balance and avoid burnout

- A less stressful work environment improves employee retention

- The team learns how long this type of project will take in the future so they can plan accordingly

- Fewer bugs and tech debt

- Deadlines remain the same and are met, keeping the board and customers happy

- No upcoming projects or additional current projects are delayed

- The team feels good about the completion of their project by taking full ownership of it and hitting the deadlines

- The team learns what is needed as MVP in the future for similar projects to plan accurately

Cons

- A follow-up of version 2 may need to be completed sooner than expected, and future deadlines may shift due to pushing the scope into future releases

As you can see, once scope creep occurs, different trade-offs can happen with different pros and cons depending on the approach. No approach is one-size-fits-all, but generally speaking, reducing the MVP scope and trading out non-essential features has the fewest downsides. Maintaining quality standards when deploying new features into production while keeping your company culture in mind is essential. Decreased customer retention and increased employee turnover can quickly become a slippery slope that is difficult to recover from.

Out With the Old, In With the New

Old Coding Patterns

It's inevitable. There is no way around this happening over time. Coding patterns change, and best practices change, as they should! The tech industry is constantly evolving and growing, and everyone is learning. The issue is that while we learn improved and faster ways to develop code, our existing code base isn't consistently updated to match our best practices. Updating old code takes time, and it's hard to allocate time for just rewriting code when we could be building new features our customers are asking for. Unfortunately, this tech debt typically leads to slower development, higher bug count, and degraded performance.

The key to fixing this type of tech debt is advocacy, and when advocating for fixing this type of tech debt, the best approach is a data-based approach. It's easy to say, "This code is messy and takes longer than it should to update," but what does that mean? A balance must exist between product, engineering, and quality. While engineering and quality may feel the pain of old code, learning how to tell that story to product can be the make-or-break difference in improving your day-to-day development. Below are some examples of data points to collect that can help you build your story and gain buy-in around the benefits of updating old code:

- Developing Feature A with the new pattern took us four sprints. In our older code, delivering that same feature would take ten sprints, which is 2.5x the amount of time.

- Our old code limits what we can do with the new feature request you are proposing. If we were to update our old code base, in the future, we would be able to deliver A, B, and C requirements within the timeframe you are asking for. Still, today for us to deliver A, B, and C requirements, it will take us an additional three sprints to complete that work, and this will continue to be the case for all future features until we can transition that code into the new pattern.

- Because of the pattern we followed previously, we are finding three bugs every sprint that must be fixed quickly. Of our 24pt velocity, we are currently dedicating 6 points to fixing these bugs every sprint. That is 25% of our velocity being taken away by this tech debt. This means that for every four sprints we complete, one full sprint is dedicated to working on bugs due to this old code tech debt. I've done the SPIKE, and updating the old codebase will take 6 sprints. While this seems like a lot, over the next calendar year, we would spend more than 6 sprints total on fixing bugs produced by this old code and continue this pattern next year.

The key is to utilize data points to help everyone involved understand the old code's effects on the team and the company. Time = Money, so you can equate these numbers to how much money is being spent every year due to the tech debt to help tell your story. Remember, part of the PDLC and SDLC is Maintenance/Improvement. This is where tech debt falls, and we should have time to maintain and improve all features we release to customers for this exact reason.
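To show how the bug example above turns into a number you can present, here is a minimal Python sketch. The velocity, bug points, and refactor estimate come from that example. `SPRINTS_PER_YEAR = 26` is my assumption (two-week sprints), and the function names are hypothetical.

```python
# Illustrative sketch. Inputs come from the bug example above, except
# SPRINTS_PER_YEAR, which assumes two-week sprints (an assumption).
VELOCITY_POINTS = 24        # points completed per sprint
BUG_POINTS_PER_SPRINT = 6   # points spent on bugs caused by the old code
REFACTOR_SPRINTS = 6        # estimate from the spike
SPRINTS_PER_YEAR = 26       # assumption: two-week sprints


def bug_tax_fraction(bug_points: int, velocity: int) -> float:
    """Share of each sprint lost to bugs from the old code."""
    return bug_points / velocity


def sprints_lost_per_year(bug_points: int, velocity: int, sprints_per_year: int) -> float:
    """Sprint-equivalents of capacity spent on those bugs over one year."""
    return bug_tax_fraction(bug_points, velocity) * sprints_per_year


if __name__ == "__main__":
    tax = bug_tax_fraction(BUG_POINTS_PER_SPRINT, VELOCITY_POINTS)
    lost = sprints_lost_per_year(
        BUG_POINTS_PER_SPRINT, VELOCITY_POINTS, SPRINTS_PER_YEAR
    )
    print(f"Bug tax: {tax:.0%} of velocity")
    print(f"Lost per year: {lost:.1f} sprints vs. {REFACTOR_SPRINTS} sprints to refactor")
```

Under these assumptions the team loses 6.5 sprints a year to the old code, slightly more than the 6 sprints the refactor would take. Multiplying the lost sprints by what a sprint costs your team gives you the dollar figure for your story.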

"Quality means doing it right when no one is looking." - Henry Ford

Quality Output

Whether you're already at a company that puts quality first or at a company that seems to put quality last, quality output matters. A quality product keeps our customers returning for more. Without quality output, the company cannot survive. High-quality work helps us reduce the bugs introduced into production so our customers have the best experience possible. So what goes into producing a high-quality output?

Quality Ownership

Quality is not a one-person job. Sure, quality engineers are typically the last people to test before a feature is released to customers, but just because they are at the end of the process does not mean they own quality alone. Everyone owns quality, and we can only be successful if everyone is involved in the quality processes.

- Engineering Ownership: Code Quality, Unit Test Coverage, Testable Code, Automation Triaging, Automation Maintenance for Breaking Updates

- Product Ownership: Involving Quality in Feature Planning, Planning for End-to-End Testing, Providing Detailed Requirements

- Quality Engineering Ownership: Testing Stories, End-to-End Testing Features, Building Automation, Feature Test and Strategy Planning, Establishing Quality Metrics

- Shared Ownership: Finding and Reporting Bugs, Fixing Bugs, Customer Advocacy, Adhering to Quality Gates

When we all work together to produce quality features for our customers, only then will we be highly successful. Collaboration is vital to high-quality output. While one quality engineer may be solid on the team, additional input from engineering and product will further strengthen the quality output and provide additional insight the quality engineer may not have originally had. This supports testing and automation output, increasing the ability to catch bugs before releases occur.

Escaped Bugs

Taking quality ownership a step further, you will see a bug escaping to production is not a quality engineering miss; it is a whole process miss. There are several possible failure points in this process where bugs can occur, but at a bare minimum, the bug was a miss while coding and testing. Bugs can also be a miss due to missing or unclear product requirements and can stem back to improper initial feature planning. Yes, quality engineers are the testers and are meant to catch bugs before they are released into production. Still, quality engineers are only as strong as the information provided to them.

I have experienced incidents where the entire engineering team, quality, and product have all said, "We had no idea this outcome was possible." These things happen, and we should take them as a learning experience and remember this in the future. There was no way the quality engineer could catch the bug before production because no one on the team understood this outcome could occur.

I have also experienced incidents where the quality engineer was blamed for missing a critical test the team knew needed to be tested. Once deeper conversations with the group occurred, it was discovered that those conversations never happened with the quality engineer, and it was something that the quality engineer had no previous experience testing. By not having proper conversations in grooming and planning meetings, this type of testing was missed entirely through a process failure.

We drive escaped bug numbers down by creating a safe environment for learning and establishing a quality culture where we hold everyone to the same quality standards while avoiding finger-pointing. Keeping an environment blameless and having team-based conversations about how to improve going forward is crucial to increase quality output successfully.

Quality Features

Planning

As discussed earlier in the PDLC and SDLC section, quality starts with planning. Quality and engineering should be part of the Prototyping/Requirement Gathering and Analysis phase conversations. This allows feedback to be provided early in the process and allows quality to start thinking through testing strategy, asking questions, and raising known risks from a quality perspective. While many companies view quality engineers only as testers and bring them in during the Development/Implementation and Testing phase, this is only a tiny part of what quality engineers do. Quality engineers can and should provide input on every feature the team builds. Quality input can influence how the feature is built and what is included in the MVP. By working through this during planning, there is less scope creep and a higher chance of high-quality output.

Deadlines

Another common mistake is companies gathering data for a development deadline without considering quality testing. They may talk to architects or tech leads and get an estimated 8 weeks for development, but they don't speak to the quality engineers to learn how long testing will take. This causes false deadlines to be set and teams to be rushed. When teams are rushed, as we previously mentioned, quality is one of the first things to take a hit. This can be through lack of manual testing, lack of automation, eliminating beta phases, or eliminating end-to-end testing time. The less testing we do, the more likely bugs escape to production. While taking a hit on quality seems like the easy fix, it typically has long-term effects. Proper planning of deadlines will help to avoid this rush leading to decreased testing and decreased quality.

Bug Fixes

Planning and deadlines play a significant role in having time to fix bugs before releasing. When bug fixing isn't given the time and attention it needs, customers feel the impact directly. Fixing bugs before releases offers customers the best possible experience when the feature is released. It builds trust between the company and customers, builds credibility, and improves customer retention. Think about yourself as a customer instead of a worker. If your favorite application ships bugs with every release, breaks login once per month, forces you to clear your cache constantly, force closes on you for no clear reason, has stale data in the system after a big update, or is always lagging after updates are made, you won't trust the company to release good code, and you'll find a better application that is worth your time and money. While working within the tech industry, we need to remember how we feel as customers, too, and what effect the company's decisions would have on us as customers. Deciding to release bugs regularly is a quick and easy way to lose customer trust and lose customers.

Training

Ok, this is all good, but how do we achieve this high-quality output? Training! The best part about this training is it can quickly come from within your company. Your quality engineers and leadership are sufficient to teach others how to work with quality in mind, instill ownership at each level, and create a culture of accountability.

I've personally done this with my team when I was an individual contributor in the past. I taught them how to test like a quality engineer, correctly write test cases, utilize our test case repository, and how I think as a quality engineer. It immensely helped the quality output of our team, automation advocacy, and the team's understanding of the metrics that were needed and of the tedious and repetitive work I was doing, and it built empathy around quality practices. It helped us drive conversations around automation tools and best quality practices, identify overlap that was causing double work, and become more efficient as a team. It also helped when I was out on PTO because the team didn't need another quality engineer to backfill me. They could temporarily self-sustain and keep projects moving with proper test documentation and coverage.

Training engineers is only one piece of the puzzle. This training should include engineering and product at a minimum. Engineering leadership, product leadership, and all individual contributors should be part of the training to help enforce quality standards. This training is also an ongoing effort. Although initial training will be your most significant lift, as things change with quality processes, quality gates, quality tools, and anything else quality-related, new training should happen with all stakeholders so everyone knows the new expectations. It's also best to remember that most of us do not automatically pick up new habits after one training, so continuous coaching will be needed as new processes and expectations are set.

"Balance is not something you find, it's something you create." - Jana Kingsford

The Balance

Tackling the build-up of existing tech debt and bugs will take a different approach than maintaining a decreased tech debt and bug count. Creating a balance between features, tech debt, and bugs will inevitably save the company time and money in the long run, and with some upfront lift that likely needs to occur, the company can course correct while settling into a new norm.

The Backlog

Going through the backlog to fix existing bugs and tech debt is not an overnight quick fix. This will likely take you 1-2 years, depending on how much build-up exists and how much time you can allocate to this work, but a couple of different approaches can be taken to work on these areas.

Sprint Balance

One approach you can take is to subdivide the work in your sprints. Set a percentage allocated for feature work, bugs, and tech debt. These percentages can look different at each company depending on how much work there is to do. Utilize data you've collected to see how many bugs for that team are opened each sprint to determine how much work needs to be done each sprint to get ahead. An example starting place could be this:

- Feature Work: 60%

- Bug Fixes: 20%

- Tech Debt: 20%

This is a relatively aggressive approach towards bugs and tech debt, but we need something more aggressive than breaking even while we catch up on the backlog of work. For a team that averages a 24pt sprint, 14pt goes towards feature development, 5pt to bugs, and 5pt to tech debt.
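Percentages rarely divide a sprint into whole points, so some rounding is needed. Below is a minimal Python sketch of one way to do it, using largest-remainder rounding so the parts always add back up to the team's velocity. The function name `split_velocity` and the category keys are hypothetical, and the rounding rule is my choice, not a standard your team must follow.

```python
def split_velocity(velocity: int, percentages: dict[str, int]) -> dict[str, int]:
    """Split whole story points by percentage using largest-remainder rounding."""
    if sum(percentages.values()) != 100:
        raise ValueError("percentages must add up to 100")
    # Integer math avoids floating-point surprises: work in hundredths of a point.
    scaled = {name: velocity * pct for name, pct in percentages.items()}
    points = {name: value // 100 for name, value in scaled.items()}
    leftover = velocity - sum(points.values())
    # Hand the leftover points to the categories with the largest remainders.
    by_remainder = sorted(scaled, key=lambda name: scaled[name] % 100, reverse=True)
    for name in by_remainder[:leftover]:
        points[name] += 1
    return points


if __name__ == "__main__":
    balance = {"feature_work": 60, "bug_fixes": 20, "tech_debt": 20}
    print(split_velocity(24, balance))
```

For the 24pt team above, this produces the same 14pt, 5pt, and 5pt split.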

Quarterly Balance

A second approach you can take is to have a specific sprint set aside every quarter that only focuses on bugs and tech debt. Depending on how aggressive you need to be with bugs and tech debt, one sprint may not be enough, as this would result in 15% of all work being put toward bugs and tech debt instead of the 40% above. Still, it allows teams to stay focused on feature work without context switching to bug and tech debt work.
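How much of the quarter a dedicated sprint represents depends on your sprint length. Here is a minimal Python sketch of that calculation, assuming a 13-week quarter and two-week sprints. Both are my assumptions, and `WEEKS_PER_QUARTER` and `dedicated_share` are hypothetical names.

```python
WEEKS_PER_QUARTER = 13  # assumption: 52 weeks / 4 quarters


def dedicated_share(dedicated_sprints: int, sprint_length_weeks: int) -> float:
    """Fraction of a quarter spent in bug and tech-debt-only sprints."""
    sprints_per_quarter = WEEKS_PER_QUARTER / sprint_length_weeks
    return dedicated_sprints / sprints_per_quarter


if __name__ == "__main__":
    for sprints in (1, 2, 3):
        share = dedicated_share(sprints, sprint_length_weeks=2)
        print(f"{sprints} dedicated sprint(s): {share:.0%} of the quarter")
```

With these assumptions, one dedicated sprint is about 15% of the quarter, and you would need between two and three dedicated sprints to match the 40% from the sprint balance approach.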

Tech Debt and Bug-Focused Quarter

While this is the most aggressive approach, it will be the best fit in some situations. Suppose the majority of the backlog is bugs and tech debt, or the company, in general, wants to address this quickly instead of drawing it out over several quarters. In that case, there is the option to have the team(s) solely focus on fixing bugs and tech debt as the quarterly goal. This is also a good choice for more significant issues requiring complete rework of APIs, consolidating microservices, or refactoring old code bases. This may be the best approach if bugs and tech debt are causing substantial pain for the team and customers.

Future Work

When it comes to maintaining a decreased tech debt and bug count for future work, we must implement all of the practices discussed in this article. By doing so, we will proceed with higher expectations and a quality-minded culture. This will lead us to reduce the number of bugs and the amount of tech debt produced. As a recap, we need to:

- Follow the PDLC and SDLC

- Adequately plan our features

- Allow buffer time to set appropriate deadlines

- Utilize trade-offs to balance scope creep without compromising quality

- Allow time for updating old coding patterns

- Create widespread quality ownership through training and coaching

- Build cross-functional collaboration within the teams

- Set accurate deadlines and fix bugs before releases

Once the backlog is caught up, the team can also adjust sprint and quarterly balance percentages to a more sustainable balance, such as:

- Feature Work: 85%

- Bug Fixes: 5%

- Tech Debt: 10%

Tech debt and bugs will occur, and there is no way around it. As the PDLC and SDLC state, we should consistently account for time to improve and maintain our existing codebase. Still, we can significantly reduce the number of bugs and tech debt produced to be manageable instead of building up in our backlogs over months or even years. Improving the practices discussed and striving for World-Class Quality will help your company thrive, grow and retain customers, and retain employees.